Part 2 of our series, “Here’s What a Network Needs After a Cloud Migration.” Part 1 looked at how to redesign the LAN.

When a company’s application infrastructure moves to the cloud, a reliable Internet connection becomes mandatory. Hiccups in Internet service that might have been an inconvenience when apps were in-house now grind the business to a halt.

Unfortunately, the Internet link happens to be the single least reliable element in an IT infrastructure. So here’s what you need to do to make sure you’re minimizing outages for your cloud-hosted clients.

Implement redundant links for reliability

I always recommend implementing dual redundant Internet links for organizations that have critical cloud application infrastructure.

Typical business Internet links have a committed service availability of 99.9%. This means they’re allowed (and expected) to be down 0.1% of the time. That doesn’t sound like much until you multiply it out: 0.1% of a year is nearly nine hours (about 8.8 hours), which could mean one full business day of downtime or large chunks of several days.
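The arithmetic is easy to check. The short Python sketch below converts an availability figure into expected downtime per year. It also shows what a second link buys you, under the optimistic assumption that the two links fail independently. In practice, circuits that share a conduit, a building entry point, or an upstream carrier do not fail independently, so treat the dual-link figure as a best case.

```python
HOURS_PER_YEAR = 365 * 24  # ignores leap years


def downtime_hours(availability: float) -> float:
    """Expected hours of downtime per year for an availability between 0 and 1."""
    return (1.0 - availability) * HOURS_PER_YEAR


def combined_availability(a: float, b: float) -> float:
    """Availability of two links, ASSUMING their failures are independent.

    Service is lost only when both links are down at the same time.
    """
    return 1.0 - (1.0 - a) * (1.0 - b)


single = downtime_hours(0.999)
dual = downtime_hours(combined_availability(0.999, 0.999))
print(f"one link:  {single:.2f} hours/year")
print(f"two links: {dual * 3600:.0f} seconds/year (best case)")
```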

And that’s just what’s expected and intended. What if there’s a fiber cut, a fire or (more likely) a mistake by a technician somewhere? Your client could be out of service for much longer.

If the cost to your client’s business of a day or two per year of lost service is greater than the cost of a redundant link, then it clearly makes good business sense to implement a backup link. And if you have a service level agreement with your client that includes penalties, each outage at the ISP will look like your outage. This often results in a finger-pointing exercise that doesn’t benefit your business relationship with the client.
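The comparison in the first sentence of that paragraph can be written down directly. In this minimal Python sketch, every figure is a hypothetical estimate that you would agree on with the client; the function name is illustrative only.

```python
def backup_link_pays_off(
    outage_hours_per_year: float,
    business_cost_per_hour: float,
    backup_link_cost_per_year: float,
) -> bool:
    """True if the expected yearly cost of outages exceeds the backup link's cost."""
    expected_outage_cost = outage_hours_per_year * business_cost_per_hour
    return expected_outage_cost > backup_link_cost_per_year


# Hypothetical figures: two 8-hour business days lost per year,
# $2,500 of lost business per hour, $12,000 per year for a backup circuit.
print(backup_link_pays_off(16, 2_500, 12_000))
```

The hard part is not the multiplication but agreeing on a realistic cost per outage hour with the client.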

The edge firewall can be configured with two outside interfaces connecting to two different ISPs. There are two ways to do this.

Option A: Idle backup link

The first way is to simply set up a backup link that sits idle except when the primary link is unavailable. In many cases, it’s possible to get such a link at a reduced cost because the provider knows it won’t be used most of the time.

Then you’d configure the edge firewall to use the primary link if it’s available, and to automatically switch over to the other link if the first one goes down. Many commercial firewalls can do this quite easily.
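Commercial firewalls implement failover with their own health checks and settings, so the Python sketch below only models the decision logic; it is not any product’s configuration. It assumes some external probe (for example, a periodic ping to a host beyond the ISP) reports whether the primary link is working. The class name and thresholds are hypothetical. Requiring several consecutive failures before failing over, and several consecutive successes before failing back, keeps a marginal link from flapping between the two.

```python
class FailoverController:
    """Hypothetical primary/backup link selector with hysteresis."""

    def __init__(self, fail_threshold: int = 3, recover_threshold: int = 5):
        self.fail_threshold = fail_threshold        # failures before switching to backup
        self.recover_threshold = recover_threshold  # successes before switching back
        self.active = "primary"
        self._fails = 0
        self._oks = 0

    def record_primary_probe(self, ok: bool) -> str:
        """Feed one health-check result for the primary link; return the active link."""
        if ok:
            self._fails = 0
            self._oks += 1
            if self.active == "backup" and self._oks >= self.recover_threshold:
                self.active = "primary"
        else:
            self._oks = 0
            self._fails += 1
            if self.active == "primary" and self._fails >= self.fail_threshold:
                self.active = "backup"
        return self.active


controller = FailoverController(fail_threshold=3, recover_threshold=2)
for result in (False, False, False, True, True):
    print(controller.record_primary_probe(result))
```

For simplicity the sketch never checks whether the backup link itself is healthy, which is exactly the gap the next paragraph warns about.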

If you implement such a setup, it’s important to periodically test the backup link. If you aren’t using it regularly, the backup link could go down without you noticing, and then be unavailable when you need it.
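One way to automate that test, sketched below in Python, is to open a TCP connection with the source address set to the address on the backup link, and to flag the link if that hasn’t succeeded recently. This assumes the firewall or router sends traffic from that source address out of the backup interface (source-based or policy routing); without that, the probe may simply leave through the primary link and prove nothing. The function names, the target host, the source address, and the seven-day threshold are all hypothetical.

```python
import socket
from datetime import datetime, timedelta
from typing import Optional, Tuple


def probe_from_source(target: Tuple[str, int], source_ip: str, timeout: float = 3.0) -> bool:
    """Return True if a TCP handshake to `target` completes from `source_ip`."""
    try:
        # source_address binds the local end; port 0 lets the OS pick a port.
        with socket.create_connection(target, timeout=timeout, source_address=(source_ip, 0)):
            return True
    except OSError:  # covers refused connections, timeouts and unreachable networks
        return False


def backup_test_overdue(
    last_success: Optional[datetime],
    now: datetime,
    max_age: timedelta = timedelta(days=7),
) -> bool:
    """True if the backup link has never been verified, or not within max_age."""
    return last_success is None or now - last_success > max_age


# Example with hypothetical addresses (documentation range), run from a scheduler:
# ok = probe_from_source(("www.example.com", 443), "198.51.100.10")
```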

Alternatively, the edge firewall can be configured to do NAT on both interfaces, using the addresses each ISP supplies, and to split outbound traffic to the Internet between the two interfaces. This way both links are used, although the load sharing will never be very even: each connection has to stay on the interface whose address it was translated to, so one large transfer can keep one link busy while the other sits nearly idle.
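A common way to split traffic like this is per-flow hashing: the firewall hashes each connection’s addresses and ports and uses the result to pick an interface, so every packet of that connection keeps the same NAT address. The Python sketch below assumes that approach; real firewalls may instead use weighted or per-connection round-robin selection. The interface names and flows are hypothetical.

```python
import hashlib
from collections import Counter

# Hypothetical names for the two ISP-facing interfaces.
LINKS = ("isp_a", "isp_b")


def pick_link(src_ip: str, src_port: int, dst_ip: str, dst_port: int, proto: str = "tcp") -> str:
    """Map a flow to one outside interface.

    Every packet of a flow hashes to the same link, so it keeps the same
    NAT address and the replies come back through the same ISP.
    """
    key = f"{proto}|{src_ip}|{src_port}|{dst_ip}|{dst_port}".encode()
    digest = hashlib.sha256(key).digest()
    return LINKS[digest[0] % len(LINKS)]


def bytes_per_link(flows):
    """flows: iterable of (src_ip, src_port, dst_ip, dst_port, bytes)."""
    totals = Counter({link: 0 for link in LINKS})
    for src_ip, src_port, dst_ip, dst_port, size in flows:
        totals[pick_link(src_ip, src_port, dst_ip, dst_port)] += size
    return totals


# One large transfer and a few small ones: whichever link the big flow lands
# on carries most of the bytes, however evenly the flows themselves are spread.
flows = [
    ("10.0.0.5", 50000, "192.0.2.10", 443, 900_000_000),
    ("10.0.0.6", 50001, "192.0.2.20", 443, 2_000_000),
    ("10.0.0.7", 50002, "192.0.2.30", 443, 1_500_000),
    ("10.0.0.8", 50003, "192.0.2.40", 443, 3_000_000),
]
print(bytes_per_link(flows))
```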

Option B: Routers between the ISP and firewall

The other way of handling a backup Internet link is much more complicated, but it allows you to host services such as remote VPN access as well as web servers and email gateways within the infrastructure. Note that if the client’s cloud implementation includes outsourcing all email and office automation services, including file storage, then your client might never need remote access to their infrastructure, in which case this option is unnecessary.

This method involves putting a router (or better still, a pair of routers) between the ISPs and the client’s edge firewall. You should have at least two ISPs to do this properly.

The Internet-facing router exchanges BGP routing information with the ISP’s routers. BGP allows you to present the same IP addresses to the Internet through either link. (Presenting the same IP address is only important when you have IP-addressed services that must be accessible inbound from the Internet.) A BGP configuration also allows you to load-share dynamically between the two links.
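To see why the same addresses stay reachable when one link fails, it helps to look at what a remote network does with the two announcements. The Python sketch below is a drastically simplified model of BGP route selection: highest LOCAL_PREF, then shortest AS_PATH, then a deterministic stand-in for the remaining tie-breakers (real BGP also compares origin, MED, eBGP versus iBGP, IGP cost and more). It assumes the client has its own AS number and an address block both ISPs will carry; the ASNs and prefix are from the ranges reserved for documentation.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Route:
    prefix: str
    learned_via: str            # which upstream ISP the route arrived through
    local_pref: int
    as_path: Tuple[int, ...]


def best_path(routes: List[Route]) -> Optional[Route]:
    """Simplified selection: highest LOCAL_PREF, shortest AS_PATH, then a fixed tie-break."""
    if not routes:
        return None
    return min(routes, key=lambda r: (-r.local_pref, len(r.as_path), r.learned_via))


# The client (AS 64500) announces 203.0.113.0/24 through ISP A (AS 64501)
# and ISP B (AS 64502). A remote network hears both announcements.
PREFIX = "203.0.113.0/24"
via_a = Route(PREFIX, "ISP A", 100, (64501, 64500))
via_b = Route(PREFIX, "ISP B", 100, (64502, 64500))

print(best_path([via_a, via_b]).learned_via)  # → ISP A (wins the tie-break)
# If the link to ISP A fails, ISP A withdraws the route; the same prefix
# stays reachable through ISP B.
print(best_path([via_b]).learned_via)         # → ISP B
```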

One final note on Internet reliability: I always recommend deploying dual redundant edge firewalls. The firewall itself is probably less likely to fail than the Internet circuit, but firewalls can and do fail, and they are another absolutely critical piece of cloud infrastructure.

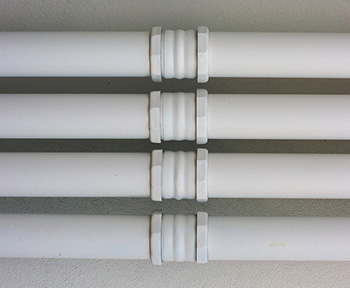

I connect the “inside” and “outside” interfaces of the primary firewall to different switches from those used by the secondary firewall. Build a “ladder” of connections: BGP router number 1 connects to external switch number 1, which connects to the external interface of firewall number 1; the internal interface of firewall number 1 connects to internal switch number 1.

Then have a completely separate line of connections for BGP router number 2, external switch number 2, and firewall number 2. And the switches at each level cross-connect to one another. This way, if any one device fails, all traffic can migrate transparently to the secondary path without interruption.
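One way to check a design like this is to model it as a graph and confirm that removing any single device still leaves a path between the LAN and the Internet. The Python sketch below does that with a breadth-first search. The device names are hypothetical, and `lan` stands in for an internal core that is assumed to connect to both internal switches. It checks physical reachability only; it says nothing about firewall state synchronization or how quickly routing converges after a failure.

```python
from collections import deque

# Hypothetical cabling for the "ladder": two parallel paths plus cross-connects.
EDGES = [
    ("internet", "bgp_router_1"), ("internet", "bgp_router_2"),
    ("bgp_router_1", "ext_switch_1"), ("bgp_router_2", "ext_switch_2"),
    ("ext_switch_1", "ext_switch_2"),   # cross-connect at the outside level
    ("ext_switch_1", "firewall_1"), ("ext_switch_2", "firewall_2"),
    ("firewall_1", "int_switch_1"), ("firewall_2", "int_switch_2"),
    ("int_switch_1", "int_switch_2"),   # cross-connect at the inside level
    ("int_switch_1", "lan"), ("int_switch_2", "lan"),
]


def reachable(edges, start, goal, failed=frozenset()):
    """Breadth-first search that ignores any device listed in `failed`."""
    graph = {}
    for a, b in edges:
        if a in failed or b in failed:
            continue
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    seen, queue = {start}, deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            return True
        for nxt in graph.get(node, ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return False


devices = {node for edge in EDGES for node in edge} - {"internet", "lan"}
single_points_of_failure = [
    d for d in sorted(devices) if not reachable(EDGES, "lan", "internet", {d})
]
print(single_points_of_failure)  # → []
```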

Carefully monitor Internet capacity

In addition to the reliability of the Internet link, you also need to worry about, and monitor, its capacity. The first symptom of an over-used Internet link will be randomly dropped packets. At a network level, this causes both workstations and servers to back off and send data more slowly. The user experience immediately suffers.
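The back-off comes from TCP congestion control. The Python sketch below is a simplified, Reno-style model of congestion avoidance (additive increase, multiplicative decrease); modern stacks add slow start and often use other algorithms such as CUBIC or BBR, and the parameters here are illustrative. It shows why a single dropped packet matters: the connection halves its sending window and then only slowly grows it back.

```python
def aimd_window(loss_per_rtt, start_cwnd=10.0, increase=1.0, decrease_factor=0.5, min_cwnd=1.0):
    """Congestion window (in packets) after each round trip.

    loss_per_rtt: sequence of booleans, True if a loss was detected in that round trip.
    """
    cwnd = start_cwnd
    history = []
    for lost in loss_per_rtt:
        if lost:
            cwnd = max(min_cwnd, cwnd * decrease_factor)  # back off sharply on loss
        else:
            cwnd += increase  # probe gently for more bandwidth
        history.append(cwnd)
    return history


print(aimd_window([False, False, False, True, False, False]))
# → [11.0, 12.0, 13.0, 6.5, 7.5, 8.5]
```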

Unfortunately, just looking at metrics like 5-minute average throughput won’t tell you that you’re dropping packets. A 100 Mbps link can transmit just over 8,000 packets per second at the maximum standard Ethernet packet size of 1,500 bytes. That’s about eight packets per millisecond.
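The packet-rate figure is a one-line calculation, shown here in Python. The second call adds the 38 bytes of Ethernet framing overhead per frame (header, frame check sequence, preamble and inter-frame gap), which lowers the on-wire rate a little but doesn’t change the point.

```python
def packets_per_second(link_bps: float, packet_bytes: int = 1500) -> float:
    """Maximum packets per second if every packet is packet_bytes long."""
    return link_bps / (packet_bytes * 8)


pps = packets_per_second(100_000_000)
print(f"{pps:,.0f} packets/s, {pps / 1000:.1f} packets/ms")  # → 8,333 packets/s, 8.3 packets/ms

# Including Ethernet framing overhead (14 + 4 + 8 + 12 = 38 bytes per frame):
wire_pps = packets_per_second(100_000_000, 1500 + 38)
print(f"{wire_pps:,.0f} packets/s on the wire")
```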

If traffic arrives faster than that, the excess packets are buffered. The buffer is usually large enough to hold several packets, but once it fills, any further packets are dropped. It’s important to understand that all of this happens within milliseconds. So a burst of traffic that’s far too short to ever be seen on a network utilization graph can cause real application performance issues.
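A toy simulation makes the point concrete. The Python sketch below models a first-in, first-out queue with tail drop feeding a link, one millisecond per step. The 16-packet buffer and the burst pattern are hypothetical, and the drain rate is rounded down to 8 packets per millisecond.

```python
def simulate_tail_drop(arrivals_per_ms, service_per_ms, buffer_packets):
    """Simulate a FIFO queue feeding a link, one millisecond per step.

    arrivals_per_ms: packets arriving in each millisecond
    service_per_ms:  packets the link can transmit per millisecond
    buffer_packets:  how many packets the queue can hold
    Returns (packets_sent, packets_dropped).
    """
    if service_per_ms <= 0:
        raise ValueError("service_per_ms must be positive")
    queue = sent = dropped = 0
    for arriving in arrivals_per_ms:
        accepted = min(arriving, buffer_packets - queue)  # tail drop once the buffer is full
        dropped += arriving - accepted
        queue += accepted
        transmitted = min(queue, service_per_ms)
        queue -= transmitted
        sent += transmitted
    while queue:  # let the queue drain after the last arrivals
        transmitted = min(queue, service_per_ms)
        queue -= transmitted
        sent += transmitted
    return sent, dropped


# A 3 ms burst of 20 packets/ms into a link that drains about 8 packets/ms
# (100 Mbps at 1,500 bytes), with a hypothetical 16-packet buffer.
sent, dropped = simulate_tail_drop([20, 20, 20], service_per_ms=8, buffer_packets=16)
# Share of capacity used over 5 minutes if nothing else were sent.
five_minute_average = sent / (8 * 5 * 60 * 1000)
print(sent, dropped)                 # → 32 28
print(f"{five_minute_average:.4%}")  # → 0.0013%
```

Almost half the burst is lost, yet the 5-minute average shows the link as essentially idle.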

To make matters worse, a typical web-based application sends far less data up to the cloud than the cloud sends back down. The busy direction is therefore inbound, so packets are most likely to be dropped in the queue at the client’s ISP, where traffic waits to be sent down the link. As a result, you probably won’t see anything registering in the dropped packet counters on the edge firewall, even if packets are being dropped in large numbers.

The easy answer to the problem is to ensure you have considerable excess capacity on the inbound side of the Internet link. You can also check whether performance problems might be caused by Internet link congestion: look at when the problems occur and see whether those times correlate with periods of high inbound traffic. But always bear in mind that there could be real congestion problems even when the average numbers are well below 100% link utilization.

In the final post of this series, I’ll look at best practices for managing user access and authentication for a client that has migrated to the cloud.